Why MedTech Companies Need SOPs for AI Consultants and Tools



Manfred Maiers

10/13/2025

Applying 21 CFR Part 820.50 Purchasing Controls to the Age of Artificial Intelligence

Artificial Intelligence is rapidly transforming the MedTech industry, from design and quality systems to manufacturing analytics and post-market surveillance. But as AI moves from curiosity to operational reality, a new compliance blind spot has emerged: the lack of formal supplier controls for AI consultants, developers, and tools.

In an industry governed by 21 CFR Part 820, every external service provider, from sterilization vendors to software developers, must operate under clearly defined purchasing controls. AI should be no exception.

The Regulatory Backbone: 21 CFR Part 820.50

21 CFR Part 820.50 requires medical device manufacturers to “establish and maintain procedures to ensure that all purchased or otherwise received product and services conform to specified requirements.”

In practical terms, this means:

Evaluating and qualifying suppliers based on their ability to meet regulatory and quality requirements.

Establishing quality agreements and documented expectations.

Verifying deliverables before use in regulated systems.

When applied to AI services, these expectations must evolve but not disappear. Instead of sterilization certificates or calibration records, MedTech organizations must now assess:

The AI consultant’s understanding of regulated industry frameworks (FDA QMSR, ISO 13485, MDR, HIPAA).

The transparency and traceability of the AI tools and datasets used.

The security and location of the underlying infrastructure, especially when it involves cloud or large language model (LLM) access.

The New Category of “AI Service Suppliers”

AI consultants, data science vendors, and generative AI platforms are now effectively suppliers and must be treated as such under the quality system.

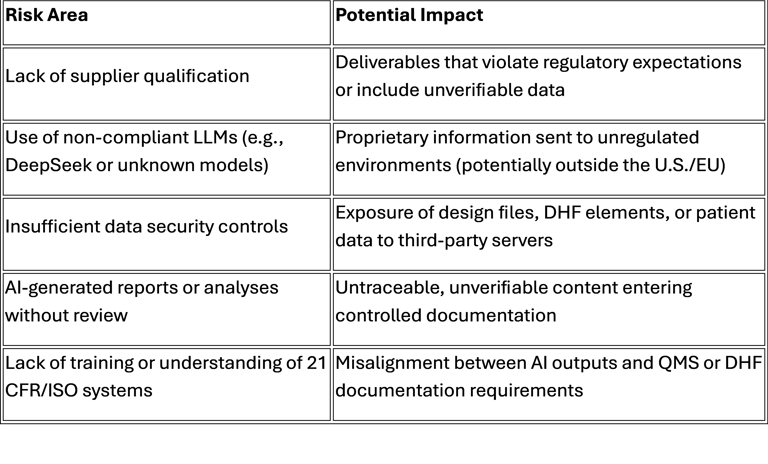

Yet, few MedTech organizations apply the same rigor they use for contract manufacturers or test labs. This gap creates major risks:

Lessons from the AI Policy Framework

In our earlier discussion on AI Policies and Training, we drew parallels between AI use and cybersecurity. The same thinking applies here, except now, the risk lies outside your walls.

Key AI Policy principles that must extend to external partners include:

Approved platforms only: Vendors must use company-approved, validated AI environments.

Data protection: No proprietary or patient data can be entered into public AI tools.

Transparency: Vendors must document which models or tools were used for deliverables.

Accountability: The manufacturer remains ultimately responsible for the content integrated into regulated systems.

When these principles are not part of formal SOPs or supplier agreements, compliance breaks down.

What an AI Supplier SOP Should Include

A robust Standard Operating Procedure for AI consultants and tools should mirror traditional purchasing controls, adapted for digital and AI-specific risks.

1. Supplier Qualification

Assess the consultant’s understanding of MedTech regulations, data integrity principles, and QMS alignment.

Require documentation of AI model origin, ownership, and training data sources.

Evaluate the security and hosting jurisdiction of any cloud or LLM infrastructure.
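The qualification criteria above can be captured as a simple record that makes open items visible. The following is a minimal sketch, not a prescribed form: the class name, field names, and the supplier name in the usage lines are hypothetical and would need to be mapped onto your own supplier qualification template.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AISupplierQualification:
    """Hypothetical qualification record for an AI consultant or tool vendor."""

    supplier_name: str
    # Understanding of MedTech regulations, data integrity, and QMS alignment
    regulatory_knowledge_assessed: bool = False
    # Documentation of model origin and ownership
    model_origin_documented: bool = False
    # Documentation of the data sources used to train the model
    training_data_sources_documented: bool = False
    # Review of the cloud or LLM infrastructure (security, hosting location)
    infrastructure_reviewed: bool = False

    def open_items(self) -> List[str]:
        """Return the criteria that have not been satisfied yet."""
        checks = {
            "regulatory knowledge": self.regulatory_knowledge_assessed,
            "model origin and ownership": self.model_origin_documented,
            "training data sources": self.training_data_sources_documented,
            "infrastructure review": self.infrastructure_reviewed,
        }
        return [name for name, done in checks.items() if not done]

    def is_qualified(self) -> bool:
        """A supplier is qualified only when no criteria remain open."""
        return not self.open_items()


# Usage with an illustrative supplier
record = AISupplierQualification(
    supplier_name="Example AI Consulting",
    regulatory_knowledge_assessed=True,
    model_origin_documented=True,
)
print(record.is_qualified())
print(record.open_items())
```

Keeping each criterion as an explicit field, rather than a single pass/fail flag, leaves an auditable trail of what was and was not assessed.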

2. Supplier Agreement / Statement of Work (SOW)

Include clauses specifying data ownership, confidentiality, and use restrictions.

Require vendors to use only company-approved AI accounts or controlled environments.

Define expectations for traceability of AI-assisted outputs (e.g., flagged sections, model references).
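One way to make the traceability clause concrete is to require a short, machine-readable disclosure with every deliverable. The sketch below is illustrative only: the `AIUsageDisclosure` fields, the `APPROVED_ENVIRONMENTS` allowlist, and all identifiers in the usage lines are assumptions, not an established format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List

# Hypothetical allowlist; in practice this would come from the
# company's register of approved AI accounts and environments.
APPROVED_ENVIRONMENTS = {"company-llm-sandbox"}


@dataclass
class AIUsageDisclosure:
    """Hypothetical disclosure a vendor attaches to each deliverable."""

    deliverable_id: str
    tool_name: str
    model_reference: str  # model name or version used
    environment: str      # account or environment the tool ran in
    ai_assisted_sections: List[str] = field(default_factory=list)

    def uses_approved_environment(self) -> bool:
        """Check the declared environment against the allowlist."""
        return self.environment in APPROVED_ENVIRONMENTS

    def to_json(self) -> str:
        """Serialize the disclosure so it can be filed with the deliverable."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Usage with illustrative values
disclosure = AIUsageDisclosure(
    deliverable_id="RPT-001",
    tool_name="ExampleLLM",
    model_reference="example-model-v1",
    environment="company-llm-sandbox",
    ai_assisted_sections=["3.2 Trend analysis"],
)
print(disclosure.uses_approved_environment())
print(disclosure.to_json())
```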

3. Verification of Deliverables

Establish a review process for all AI-generated reports, models, or insights before inclusion in controlled documentation.

Verify that no sensitive, personal, or proprietary data was exposed in the process.

Require a validation record when deliverables are integrated into product development or quality processes.
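The three verification steps above can be expressed as a release gate that lists what still blocks a deliverable from entering controlled documentation. This is a minimal sketch under stated assumptions: `DeliverableReview`, `release_blockers`, and the record identifiers are hypothetical names, and the rule that a validation record is needed only when the deliverable feeds product development or quality processes follows the wording of the list above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeliverableReview:
    """Hypothetical review record for one AI-assisted deliverable."""

    deliverable_id: str
    reviewed_by: Optional[str] = None
    data_exposure_check_passed: bool = False
    used_in_product_or_quality_process: bool = False
    validation_record_id: Optional[str] = None


def release_blockers(review: DeliverableReview) -> List[str]:
    """Return the reasons a deliverable cannot yet be released."""
    blockers = []
    if not review.reviewed_by:
        blockers.append("no documented review")
    if not review.data_exposure_check_passed:
        blockers.append("data exposure check not passed")
    # A validation record is required only when the deliverable is
    # integrated into product development or quality processes.
    if review.used_in_product_or_quality_process and not review.validation_record_id:
        blockers.append("validation record missing")
    return blockers


# Usage with illustrative values
review = DeliverableReview(
    deliverable_id="RPT-001",
    reviewed_by="QA reviewer",
    data_exposure_check_passed=True,
    used_in_product_or_quality_process=True,
)
print(release_blockers(review))
```

Returning a list of blockers instead of a boolean gives the reviewer a record of exactly which control was not met.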

4. Data Security and Location Control

Restrict the use of any LLMs hosted in untrusted jurisdictions (e.g., outside the U.S., EU, or other compliant data protection zones).

For example, the China-based DeepSeek model, while powerful, raises questions of data sovereignty and IP security.

Mandate cloud vendor security documentation (SOC 2, ISO 27001, HIPAA alignment).
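The hosting and documentation controls above lend themselves to a simple automated check during supplier review. The sketch below assumes a hypothetical `HostingProfile` record and illustrative `TRUSTED_REGIONS` and `REQUIRED_SECURITY_DOCS` sets; which regions and documents actually qualify is a decision for your own quality and legal teams.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Illustrative values only; the real lists belong in the SOP itself.
TRUSTED_REGIONS = {"US", "EU"}
REQUIRED_SECURITY_DOCS = {"SOC 2", "ISO 27001"}


@dataclass
class HostingProfile:
    """Hypothetical description of where and how an AI service is hosted."""

    service_name: str
    hosting_region: str
    security_docs: Set[str] = field(default_factory=set)


def hosting_findings(profile: HostingProfile) -> List[str]:
    """Return findings that should block or escalate the use of a service."""
    findings = []
    if profile.hosting_region not in TRUSTED_REGIONS:
        findings.append(
            f"hosting region {profile.hosting_region} is not on the trusted list"
        )
    # Sorted so the finding text is stable from run to run.
    missing = sorted(REQUIRED_SECURITY_DOCS - profile.security_docs)
    if missing:
        findings.append("missing security documentation: " + ", ".join(missing))
    return findings


# Usage with illustrative values
profile = HostingProfile(
    service_name="example-llm-service",
    hosting_region="EU",
    security_docs={"SOC 2"},
)
print(hosting_findings(profile))
```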

The New Audit Question

Tomorrow’s auditors won’t just ask:

“How do you qualify your component suppliers?”

They’ll ask:

“How do you qualify your AI suppliers and ensure their tools and deliverables comply with 21 CFR Part 820?”

And when they do, companies with documented AI supplier SOPs will be able to demonstrate control, while others scramble to explain informal relationships and undocumented AI deliverables.

Turning Compliance into Competitive Advantage

By formalizing AI supplier controls, MedTech companies gain more than compliance:

Trust: Regulators and customers will see clear accountability and transparency.

Consistency: Controlled AI use means consistent quality in reports, analytics, and automation.

Confidence: Employees and partners know how to use AI safely and effectively.

AI doesn’t replace regulatory rigor; it demands it. Purchasing controls must evolve from hardware and services to digital intelligence.

Because in MedTech, compliance isn’t just about checking a box. It’s about protecting patients, innovation, and trust, even in the age of AI.

✅ Next Step:

If your organization uses AI consultants or generative AI platforms without formal SOPs under 21 CFR 820.50, it’s time to act. Define the process. Document the controls. Train the team.

Let’s make AI safe, secure, and compliant, by design.

Author: Manfred Maiers

Principal Consultant, Founder, NoioMed | MedTech Operations, Regulatory Compliance & AI Integration

Helping MedTech leaders design AI roadmaps and compliant operational frameworks that accelerate performance without compromising trust.