Auditing the Machines: Are Supplier Auditors Ready for the AI Era?

Why Traditional Supplier Audits May Miss the Most Modern Risk - AI Use and Cross-Client Contamination

THE LEARNING LOOP | RESPONSIBLE AI & GOVERNANCE

Manfred Maiers

11/9/2025 | 7 min read

Introduction: The New Blind Spot in Supplier Oversight

Supplier audits have long been the cornerstone of quality assurance and regulatory compliance in MedTech and advanced manufacturing. They’re how we verify that suppliers follow procedures, maintain traceability, and uphold the same standards we live by.

But as suppliers increasingly adopt Artificial Intelligence to help with documentation, quality analytics, and process optimization, a silent shift has begun, one that most audit programs are not yet prepared to detect.

Auditors are trained to verify calibration records, training files, CAPA logs, and risk management systems.

But how many are trained to recognize an AI model that remembers across clients, or to question whether a supplier’s “analytics assistant” could be reusing data from multiple customers?

As AI systems become embedded in supplier workflows, cross-client contamination, unvalidated AI tools, and data reuse are emerging as blind spots: unseen, unaudited, and unmitigated.

In this article, we’ll explore how supplier audits must evolve to meet this new challenge, and why every MedTech manufacturer needs to add AI literacy to its auditing toolkit.

1. The Expanding Landscape of Supplier AI

Five years ago, few suppliers would have mentioned AI in an audit. Today, many use it daily.

AI has quietly crept into core manufacturing and quality systems, often without explicit visibility at the corporate level.

Common AI Applications in Supplier Operations

Quality & CAPA: AI tools summarize investigations, classify root causes, and draft containment actions.

Process Analytics: Machine learning algorithms analyze SPC data and predict yield issues.

Design for Manufacturability (DFM): Generative AI assists in improving part geometry or assembly sequences.

Documentation: AI-driven systems draft SOPs, PFMEA updates, or inspection summaries.

Training and Work Instructions: Generative tools create step-by-step visual work instructions.

Each of these applications may involve client data (drawings, NCR records, process parameters) being uploaded into an AI environment.

And when those systems are shared across multiple clients or projects, data separation becomes a governance problem.

In short: AI is now a quality tool, but one with memory, and that changes everything.

2. What Auditors Are Missing

Traditional supplier audits were designed for a paper-based or digital QMS world, not for systems that think, learn, and remember.

An auditor’s standard approach might verify:

Documentation control

CAPA effectiveness

Equipment validation

Training records

Calibration and maintenance

But few audit checklists currently include:

How AI models are validated or governed

Whether AI systems are multi-tenant or client-specific

Where embeddings, training data, or prompts are stored

How suppliers ensure confidential client data isn’t reused by AI tools

Even the best auditors may walk through a supplier using AI every day and never realize it.

The risk isn’t negligence; it’s a knowledge gap. AI governance simply isn’t part of the standard auditor curriculum yet.

3. The Hidden Layer in the Audit Trail

To understand the risk, we must first recognize that modern AI systems don’t behave like ordinary software.

When a supplier uploads your defect logs, drawings, or process data into an AI tool, several things can happen behind the scenes:

a) Data Embeddings Are Created

Your information is transformed into numerical vectors stored in a vector database. Unless these databases are isolated per client, your data may coexist alongside other customers’ data.
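What per-client isolation means in practice can be illustrated with a toy example. The sketch below is not how any particular vector database works; the class name `NamespacedVectorStore`, the client IDs, and the three-number "embeddings" are all hypothetical. It only shows the property an auditor would want evidence of: a search for one client never touches another client's vectors, even when the content is nearly identical.

```python
import math
from collections import defaultdict


class NamespacedVectorStore:
    """Toy in-memory store that keeps each client's vectors in its own namespace."""

    def __init__(self):
        # client_id -> list of (doc_id, vector)
        self._spaces = defaultdict(list)

    def add(self, client_id, doc_id, vector):
        self._spaces[client_id].append((doc_id, list(vector)))

    def search(self, client_id, query, top_k=3):
        """Return the closest doc_ids, looking only inside one client's namespace."""

        def cosine(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
            return dot / norm if norm else 0.0

        candidates = self._spaces.get(client_id, [])
        ranked = sorted(candidates, key=lambda item: cosine(item[1], query), reverse=True)
        return [doc_id for doc_id, _ in ranked[:top_k]]


store = NamespacedVectorStore()
store.add("oem_a", "oem_a/ncr-001", [0.9, 0.1, 0.0])
store.add("oem_b", "oem_b/ncr-417", [0.9, 0.1, 0.0])  # near-identical content, different client

# A query on behalf of OEM A can only ever return OEM A's documents.
hits = store.search("oem_a", [1.0, 0.0, 0.0])
```

In a shared, un-namespaced store the same query would rank both documents equally, which is exactly the coexistence problem described above.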

b) AI Models Learn or Retain Patterns

Even when the supplier deletes the original files, traces can remain: fine-tuning can leave residual patterns from your data in the model’s parameters, and a retrieval index can still hold embeddings derived from it.

c) Prompt Logs Are Saved

AI systems often log every user prompt and output. If a supplier uses one shared workspace for multiple clients, those logs might intermix confidential data.
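A simple way to test for this during an audit is to ask for an export of the prompt log and check whether any single workspace contains entries for more than one client. The sketch below assumes a hypothetical export format in which each entry carries a `workspace` and a `client` field; real tools log differently, and many do not tag entries by client at all, which is itself worth noting as a finding.

```python
from collections import defaultdict


def workspaces_mixing_clients(log_entries):
    """Map each workspace that holds prompts for more than one client to those clients."""
    clients_by_workspace = defaultdict(set)
    for entry in log_entries:
        clients_by_workspace[entry["workspace"]].add(entry["client"])
    return {
        workspace: sorted(clients)
        for workspace, clients in clients_by_workspace.items()
        if len(clients) > 1
    }


# Hypothetical log export: field names and values are illustrative only.
sample_log = [
    {"workspace": "quality-shared", "client": "oem_a", "prompt": "Summarize NCR trends"},
    {"workspace": "quality-shared", "client": "oem_b", "prompt": "Draft containment action"},
    {"workspace": "oem_a-dedicated", "client": "oem_a", "prompt": "Classify root cause"},
]

mixed = workspaces_mixing_clients(sample_log)
```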

d) Outputs Can Be Reconstructed

Because AI tools are generative, they can unintentionally reproduce phrases, numbers, or patterns from earlier sessions whenever those sessions were retained as training data, memory, or retrieval context.

To an auditor, everything looks fine: controlled processes, validated software, approved procedures.

But beneath the surface, a supplier’s AI might be quietly blending knowledge between clients.

4. Why MedTech and Regulated Manufacturing Are Especially Vulnerable

In MedTech, supplier relationships are built on confidentiality and traceability.

Every drawing, test record, and CAPA file may be governed by FDA 21 CFR Part 820, ISO 13485, or an NDA.

When AI becomes part of that data flow, compliance boundaries blur.

Specific Vulnerabilities

Regulated Data in Unregulated Tools: AI applications used for root-cause summaries or validation reports may not be covered under the supplier’s QMS.

Multi-Client AI Environments: A supplier’s internal AI may process data for several OEMs simultaneously.

Loss of Traceability: It becomes difficult to prove which data informed which AI-generated output.

Intellectual Property Risk: Proprietary process knowledge could be encoded into shared AI models.

In FDA or ISO terms, this introduces both data integrity and supplier qualification concerns.

And yet, current audit frameworks rarely address them.
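The loss-of-traceability concern is the easiest of these to make concrete. One possible approach, sketched here under assumptions, is for the supplier to store a small provenance record next to every AI-generated document: which tool and version produced it, for which client, and a hash of each source file that was fed in. The function and field names below are hypothetical and are not drawn from any standard or regulation.

```python
import hashlib
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    """Fingerprint content without storing the content itself."""
    return hashlib.sha256(data).hexdigest()


def provenance_record(client_id, tool_name, tool_version, source_files, output_text):
    """Build a record linking one AI-generated output to the exact inputs behind it."""
    return {
        "client_id": client_id,
        "tool": tool_name,
        "tool_version": tool_version,
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "sources": {name: sha256_hex(content) for name, content in source_files.items()},
        "output_sha256": sha256_hex(output_text.encode("utf-8")),
    }


# Illustrative inputs only; file names and contents are made up.
record = provenance_record(
    client_id="oem_a",
    tool_name="internal-capa-assistant",
    tool_version="1.4.2",
    source_files={"ncr-001.txt": b"Burr found on part edge, lot 2231"},
    output_text="Draft root-cause summary for NCR-001",
)
```

With a record like this on file, an auditor can re-hash a source document and confirm whether it was among the inputs to a given output.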

5. Why Auditors Aren’t Catching It

There are four systemic reasons supplier audits miss AI-related risks:

1. Training Gap

Auditors are rarely trained in AI fundamentals; vector databases, model fine-tuning, and data segregation aren’t part of ISO 13485 auditor courses.

2. No AI-Specific Audit Checklist

Audit templates focus on traditional QMS elements (design control, CAPA, traceability), not AI usage or governance.

3. Invisible Systems

AI tools can be embedded in spreadsheets, cloud analytics platforms, or internal assistants. Unless an auditor asks, they’re often invisible.

4. Regulatory Lag

Neither the FDA’s QMSR transition nor ISO 13485’s current text directly addresses AI systems used by suppliers outside of product design or clinical evaluation.

The result: auditors are inspecting the visible 80% of the supplier process, while the invisible 20%, the AI layer, operates without scrutiny.

6. What AI-Related Nonconformance Could Look Like

Imagine a few realistic audit findings that might arise if auditors knew what to look for:

Supplier uses ChatGPT Enterprise to summarize CAPA data. No documented controls over prompt logging, data residency, or deletion.

Contract manufacturer trains an internal AI for yield optimization using data from multiple OEMs. No evidence of client consent or segregation.

Supplier’s quality engineer uses a personal AI assistant to draft investigation reports with confidential client data. No record of tool validation or approval.

AI-generated documents found in QMS (e.g., SOP drafts, PFMEA edits) without revision control or traceability to source data.

These are not far-fetched examples. In 2024, several large suppliers in the electronics and MedTech sectors were asked by customers to stop using generative AI tools until they could document governance controls.

7. Modernizing the Supplier Audit Framework

To stay ahead of these risks, manufacturers and auditors must adapt their audit frameworks to explicitly address AI use.

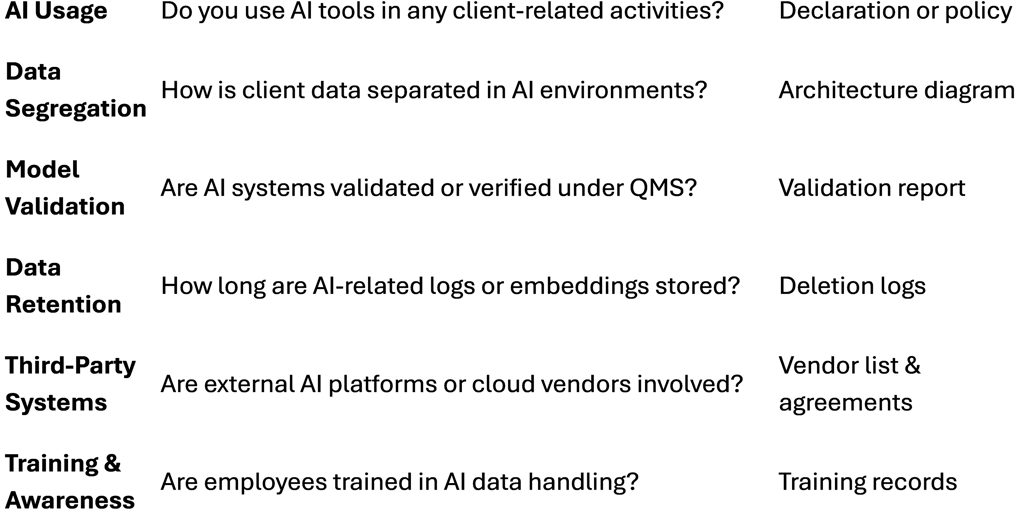

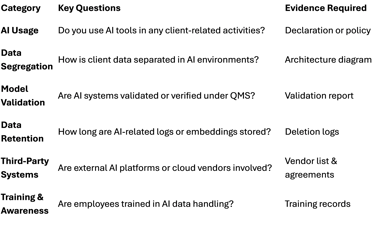

a) Add AI to Audit Checklists

Introduce a new section in supplier audit forms:

Does the supplier use AI in quality, design, or analytics?

Are AI systems validated or approved under their QMS?

Is client data used to train or fine-tune any models?

How is data segregated between clients?

What are the supplier’s AI data retention and deletion policies?
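For teams that keep audit templates in a structured, electronic form, the five questions above could be captured as data so that unanswered or unevidenced items are flagged automatically. This is only a sketch: the `ChecklistItem` fields and the rule that an item stays open until it has both an answer and evidence are assumptions, not an established audit convention.

```python
from dataclasses import dataclass
from typing import Optional

AI_QUESTIONS = [
    "Does the supplier use AI in quality, design, or analytics?",
    "Are AI systems validated or approved under their QMS?",
    "Is client data used to train or fine-tune any models?",
    "How is data segregated between clients?",
    "What are the supplier's AI data retention and deletion policies?",
]


@dataclass
class ChecklistItem:
    question: str
    answer: Optional[str] = None    # supplier's response; None if not yet asked
    evidence: Optional[str] = None  # record reviewed; None if nothing was shown


def open_items(items):
    """An item stays open until it has both an answer and objective evidence."""
    return [item.question for item in items if not item.answer or not item.evidence]


checklist = [ChecklistItem(question) for question in AI_QUESTIONS]
checklist[0].answer = "Yes, for CAPA summaries"
checklist[0].evidence = "AI tool inventory, rev B"
checklist[1].answer = "Stated as validated"  # claim made, but no record shown

remaining = open_items(checklist)
```

Requiring evidence alongside the answer mirrors ordinary audit practice: a verbal "yes, it is validated" leaves the item open.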

b) Train Auditors in AI Fundamentals

Auditors don’t need to become data scientists, but they do need literacy in:

AI architecture basics (fine-tuning vs. retrieval)

How embeddings and vector stores work

Data segregation and multi-tenancy concepts

How to verify deletion, consent, and access controls

c) Verify Validation and Change Control

If AI influences quality or regulatory outputs, it must be validated like any other software:

Documented requirements and intended use.

Verification and validation testing.

Change control and re-validation triggers.
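Re-validation triggers are easier to audit when the validated configuration is written down in a form that can be compared with what is running today. The sketch below assumes a hypothetical `AIToolState` snapshot with four attributes; which attributes actually matter depends on the tool and on the supplier's own validation plan.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AIToolState:
    """Snapshot of the attributes that were in place when the tool was validated."""
    model_version: str
    system_prompt_sha256: str
    intended_use: str
    fine_tuned_on_client_data: bool


def changed_fields(validated: AIToolState, current: AIToolState):
    """List every attribute that differs from the validated baseline."""
    return [
        f.name
        for f in fields(validated)
        if getattr(validated, f.name) != getattr(current, f.name)
    ]


# Illustrative values only.
baseline = AIToolState("2024-06", "ab12cd", "Summarize CAPA investigations", False)
in_use = AIToolState("2025-01", "ab12cd", "Summarize CAPA investigations", False)

changes = changed_fields(baseline, in_use)
needs_revalidation = bool(changes)
```

Any non-empty result would be a prompt to ask for the change record and the re-validation evidence that went with it.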

d) Request Supplier AI Declarations

Ask suppliers to provide an AI Use Declaration, similar to a software validation record, covering:

AI tools or models used in service delivery.

Data sources and segregation methods.

Model ownership and update frequency.

Compliance with confidentiality agreements.

e) Include AI in Contractual Language

Update supplier agreements and NDAs to:

Define “confidential information” as including prompts, embeddings, and AI outputs.

Prohibit cross-client model reuse.

Require written approval for any AI fine-tuning using client data.

Grant audit rights over AI-related systems.

8. Preparing Auditors for the Future

The next evolution of auditing won’t be about catching AI misuse; it will be about governing it responsibly.

Training the New Auditor

Forward-thinking companies are beginning to add AI governance modules to their auditor training programs:

How to identify ungoverned AI tools in use.

How to interview staff about AI workflows.

How to interpret logs, model documentation, or AI risk assessments.

How to evaluate third-party AI vendors as part of supplier qualification.

The future “digital quality auditor” must be as fluent in AI governance as they are in CAPA or risk management.

9. Integrating AI Risk into Supplier Qualification

Supplier qualification forms (SQFs) and audit templates should now include an AI Risk Section.

Adding this section not only strengthens compliance, but it also signals to suppliers that your organization takes AI governance seriously.

10. The Regulator’s Horizon

Regulators are not far behind.

The EU AI Act, which entered into force in 2024, classifies AI systems as “high risk” when, for example, they act as safety components of regulated products such as medical devices, and it sets separate obligations for general-purpose AI models.

The FDA, meanwhile, has been developing frameworks for AI/ML-enabled medical devices, and those principles will likely extend to manufacturing systems.

Expect regulators to soon ask:

“Does your supplier use AI in any part of the production or quality process?”

“How do you ensure AI models comply with data integrity and validation requirements?”

When that happens, organizations that have already built AI-aware audit programs will be far ahead of the curve.

11. The Human Parallel

A good auditor has always needed both technical knowledge and intuition, the ability to read between the lines.

AI changes the line itself.

In the same way that human consultants must separate client knowledge ethically, auditors must ensure machines do the same technically.

It’s no longer enough to ask, “Is your data secure?”

We must now ask, “Does your AI remember, and if so, what?”

12. The Call to Action: Build AI-Aware Audit Capability

For MedTech manufacturers and other regulated industries, the next twelve months are critical.

Before your next supplier audit cycle:

Update audit templates to include AI governance questions.

Train internal and external auditors on AI fundamentals.

Require AI usage declarations from key suppliers.

Integrate AI risk into your supplier management system.

Align your contracts, NDAs, and QMS procedures with Responsible AI principles.

Audits are only as strong as the questions they ask.

And in the age of intelligent machines, it’s time we start asking smarter questions.

Conclusion: Auditing the Invisible

The era of “AI-enabled suppliers” is here, but our audit systems are still looking backward.

Until supplier audits evolve to address AI use, cross-client contamination, and data retention, the biggest risks will remain invisible.

The next generation of audits must see beyond binders and checklists, into the digital substrate of how suppliers think, analyze, and remember.

Because in the end, the integrity of your supply chain doesn’t just depend on how your partners work.

It depends on how their machines learn.